Cognitive Tests Taken at Home Are on Par with In-Clinic Assessments

Can a cognitive test taken on a computer, in the comfort of one’s home, tell neurologists as much as a pencil-and-paper test done in a clinic? Yes, according to two researchers who presented their work at the Clinical Trials on Alzheimer’s Disease conference, held November 29 through December 2 online and in San Francisco. Jessica Langbaum of the Banner Alzheimer’s Institute in Phoenix reported that scores from five cognitive tests given over video chat correlated tightly with scores on those same tests given face-to-face. Likewise, Paul Maruff of the Australian neuroscience technology company CogState found that people who had mild cognitive impairment or preclinical AD performed similarly on in-person and remote versions of two cognitive tests. Knowing that the two testing modalities are equivalent is crucial as clinical trials move to decentralized designs.

- CDR, MMSE, ADAS-Cog, iADRS, and ADCS-ADL tests worked similarly at home and in the clinic.

- Computerized PACC tasks detected faulty cognition as accurately as did their paper-and-pencil counterparts.

- Multiple ongoing clinical trials use remote cognitive tests as endpoints.

“This is very important work, and we need to continue validating remote testing for clinical use and trials,” wrote Dorene Rentz, Massachusetts General Hospital, Boston (comment below).

Previously, researchers had found that scores on new memory tests given via smartphone correlated with scores on in-clinic assessments (Nov 2020 conference news). Ditto for the first attempt at moving the Clinical Dementia Rating (CDR) online (Dec 2021 conference news).

Now, Langbaum also found that the CDR and other commonly used cognitive tests, when completed through a computer screen, detected dementia as well as did tests administered face-to-face. She compared in-clinic to online administration of five tests: the CDR sum of boxes (CDR-SB), Mini-Mental State Exam (MMSE), Alzheimer’s Disease Assessment Scale–Cognitive Subscale 13 (ADAS-Cog13), integrated Alzheimer’s Disease Rating Scale (iADRS), and Alzheimer’s Disease Cooperative Study–Activities of Daily Living (ADCS-ADL).

Langbaum tested 82 people who had MCI or mild-to-moderate AD from the TRAILBLAZER cohort after they finished taking the study drug donanemab. Half of the participants were guided through the cognitive tests first in person and then over video chat four weeks later; the other half did the reverse. For the remote assessments, participants received paper-and-pencil versions of the cognitive tests that have written components, a laptop, a device that provides Wi-Fi, and a wide-angle web camera, and were trained to use each device. The camera helped the test raters ensure that participants did not write down answers and had no distractions while completing the at-home tests. “This work showed that you can distribute complex technology and rely on folks to set it up and use it, then get good-quality data,” Maruff wrote to Alzforum.

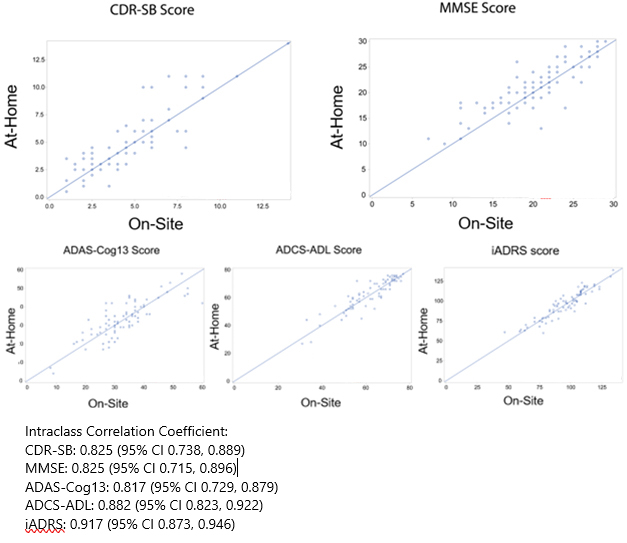

On all five tests, remote and in-clinic scores strongly correlated, with coefficients between 0.82 and 0.92 out of a perfect 1.0 (see image below). Langbaum concluded that these at-home and in-person cognitive tests were equivalent.

Big 5 Good To Go. In-clinic (x-axis) and remote (y-axis) scores on the CDR-SB, MMSE, ADAS-Cog13, ADCS-ADL, and iADRS correlated closely. [Courtesy of Jessica Langbaum, Banner Alzheimer’s Institute.]
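For readers who want to see what such a coefficient measures, here is a minimal sketch of a Pearson correlation between paired in-clinic and remote scores. The article does not say which correlation statistic the researchers used, so Pearson's r is an assumption, and the scores below are invented for illustration; they are not study data.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of co-deviations and of squared deviations from each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical MMSE-style scores for eight participants (NOT study data).
in_clinic = [28, 25, 22, 19, 26, 17, 24, 21]
remote = [27, 26, 21, 20, 25, 18, 22, 22]

r = pearson_r(in_clinic, remote)
print(f"r = {r:.2f}")
```

A value near 1.0 means participants who scored high in the clinic also tended to score high at home. Correlation alone does not show that the two sets of scores agree in absolute terms, which is why equivalence claims usually rest on additional analyses.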

Maruff found a similarly strong correlation between in-clinic and remote CDR-SB scores from people who had MCI, with a correlation coefficient of 0.86 (see image below). He also saw close alignment of in-person and computerized tasks from the Preclinical Alzheimer’s Cognitive Composite (PACC) in people who are cognitively intact but have amyloid plaques. “Unlike the CDR, the PACC requires real-time interactive administration with visual-manual requirements, such as the digit-symbol substitution test, so our work focused on how such tasks could be administered and scored over video chat,” Maruff told Alzforum.

Maruff and colleagues studied 31 people who had preclinical AD and 23 who had prodromal AD from the Australian Imaging, Biomarker, and Lifestyle (AIBL) and Australian Dementia Network (ADNeT) cohorts. Half completed the in-person tests and then the remote assessments two weeks later; half did the remote then in-clinic tests.
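Both studies used a counterbalanced order, with half of the participants tested in the clinic first and half tested remotely first, so that practice effects do not favor one modality. A minimal sketch of such an assignment follows. The article does not describe how participants were allocated, so the random shuffle, the function name `assign_counterbalanced`, and the participant IDs are all assumptions made for illustration.

```python
import random


def assign_counterbalanced(participant_ids, seed=0):
    """Split participants into two equal-sized testing orders (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    order = {}
    for pid in shuffled[:half]:
        order[pid] = ("in_clinic", "remote")
    for pid in shuffled[half:]:
        order[pid] = ("remote", "in_clinic")
    return order


# 31 preclinical + 23 prodromal participants = 54 IDs (IDs are made up).
schedule = assign_counterbalanced(range(1, 55))
clinic_first = sum(1 for o in schedule.values() if o[0] == "in_clinic")
print(clinic_first, len(schedule) - clinic_first)
```

With 54 participants, each order receives 27 people.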

During the at-home assessment, the participant video chatted with a test rater who verbally administered the CDR-SB and PACC international shopping list test. Then, the rater guided the participant through computerized versions of two more PACC tasks: the digit-symbol substitution test and the visual paired association learning test. For these, the participant completed example tasks before the real ones, with the computer advancing only after the participant got the task correct. Results from the three PACC tasks were summed to give the total score.

Digital and pen-and-paper PACC scores strongly correlated, yielding coefficients of 0.81 and 0.91 for preclinical and prodromal AD, respectively (see image below). Maruff concluded that, whether administered remotely or in person, the PACC tasks and CDR-SB can sense subtle cognitive impairment.

In Sync. In people with prodromal AD (black, left), CDR-SB scores from the remote (x-axis) and in-person (y-axis) versions correlated tightly. Ditto for remote and in-clinic scores on the PACC (right) in both prodromal and preclinical (open circles) AD. [Courtesy of Paul Maruff, CogState.]

The correlation of digital and face-to-face cognitive tests also held for people who had frontotemporal dementia. Adam Staffaroni of the University of California, San Francisco, had previously reported that scores on in-clinic executive function and spatial memory assessments from about 200 people with FTD correlated with digital tasks taken on the ALLFTD mobile app (Jun 2022 conference coverage). At CTAD, he showed the same correlations with scores from almost 300 people.

Staffaroni further showed that digital cognitive scores tracked with brain atrophy. Poor performance on the smartphone executive function or memory tasks correlated with smaller frontoparietal/subcortical or hippocampal volumes, respectively, hinting that these digital tasks reflect changes in the brain areas that control each aspect of cognition.

Way of the Future

Knowing that remote and on-site cognitive tests are equally informative is crucial as clinical trials move toward decentralized designs. For example, TRAILBLAZER-ALZ3, a Phase 3 trial testing donanemab in cognitively normal older adults with brain amyloid, is using tests administered by video call as primary and secondary endpoints (Jul 2021 news; Nov 2021 conference news). Langbaum told Alzforum that, because her study showed that cognitive tests are equivalent when delivered at home, remote assessments can be used in TRAILBLAZER-ALZ3 and satisfy FDA requirements.

Other trials are also taking a decentralized approach. The latest iteration of the Alzheimer’s Disease Neuroimaging Initiative, ADNI4, will use online recruitment and remote blood biomarker screening to enroll participants (Oct 2022 news). So, too, will AlzMatch, a study determining if a blood sample collected at a local laboratory can help speed AD trial recruitment.

In her CTAD poster, Sarah Walter of the University of Southern California, Los Angeles, described an ongoing AlzMatch pilot study. She and colleagues asked 300 people enrolled in the Alzheimer Prevention Trials (APT) webstudy, an online registry of cognitively normal adults ages 50 to 85, to go to their local Quest Diagnostics for a blood draw. Samples are sent to C2N Diagnostics in St. Louis, which measures the plasma Aβ42/40 ratio. Scientists at USC’s Alzheimer’s Therapeutics Research Institute (ATRI) then run each person’s biomarker result through an algorithm they developed to determine eligibility for further screening. ATRI researchers call each participant to tell them their result and whether they are eligible for a trial, then connect those interested in participating with a nearby clinical trial site.

So far, 142 participants have agreed to get their blood drawn, 68 have had it done, and 22 have biomarker results from C2N. ATRI researchers are now referring the latter to research centers.
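The counts above can be read as a recruitment funnel. The short sketch below works out, for each stage, the share of the previous stage and the share of the 300 people invited. The stage labels and the function name `conversion_rates` are chosen here for illustration; only the four counts come from the article, and because the pilot is ongoing, the later stages are expected to grow.

```python
# Counts reported in the article; stage labels are our own shorthand.
funnel = [
    ("invited", 300),
    ("agreed to blood draw", 142),
    ("blood drawn", 68),
    ("biomarker result back", 22),
]


def conversion_rates(stages):
    """Return (label, count, % of previous stage, % of first stage) per stage."""
    first = stages[0][1]
    rows = []
    previous = first
    for label, count in stages:
        rows.append((label, count, 100 * count / previous, 100 * count / first))
        previous = count
    return rows


rates = conversion_rates(funnel)
for label, count, pct_prev, pct_first in rates:
    print(f"{label:24s} {count:4d}  {pct_prev:5.1f}% of previous  {pct_first:5.1f}% of invited")
```

For instance, 142 of 300 invited is about 47 percent, and 68 of those 142 is about 48 percent.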

Walter said 47 percent of the participants responded to the invitation, with similar response rates across demographic groups, i.e., age, ethnicity, race, and years of education. “By making research accessible in local communities, we hope to expand access to people who have a hard time traveling to academic centers,” Walter wrote to Alzforum. In toto, she and her team hope to enroll 5,000 people into this study. —Chelsea Weidman Burke

References

News Citations

- Learning Troubles Spied by Smartphone Track with Biomarkers

- Bringing Alzheimer’s Detection into the Digital Age

- Digital Biomarkers of FTD: How to Move from Tech Tinkering to Trials?

- Can Donanemab Prevent AD? Phase 3 TRAILBLAZER-ALZ3 Aims to Find Out

- Donanemab Phase 3 Puts Plasma p-Tau, Remote Assessments to the Test

- In Its Latest Iteration, ADNI Broadens Diversity

Comments

Brigham and Women’s Hospital

This is very important work. We need to continue to validate remote testing for both clinical use and for clinical trials. The correlations between in-clinic and remote testing are reasonable. I have found the same findings between remote and in-clinic testing, and our group at the Harvard Aging Brain Study has also been successful in demonstrating adequate correlations between at-home remote testing and in-clinic testing.

Being able to successfully measure cognition multiple times over the course of a year rather than one time in-clinic will provide a closer approximation to real performance than we currently have.

Screen, Inc.

Much like the research upon exercise and changes in cognitive ability, the at-home testing in research is extremely different from the at-home testing done without research supervision. The presence of a spouse during testing invalidates many, if not most, at-home computer testing. There is no need for staff training or even presence during testing in a doctor's office, and valid longitudinal comparisons require such a controlled environment.

Disclosure: I founded the CANS-MCI (Computer Administered Neuropsychological Screen for Mild Cognitive Impairment), which is only given in controlled testing environments.
