Try This at Home: Cognitive Testing in the Age of Prevention Trials?

As Alzheimer’s treatment pushes into ever-earlier disease stages, researchers need better ways of finding people who are at risk for cognitive decline. They also need better ways of cheaply tracking cognition in those people over multi-year trials. At the 7th Clinical Trials on Alzheimer’s Disease (CTAD) conference, held November 20 to 22 in Philadelphia, speakers discussed how moving cognitive testing into people’s homes could meet these needs. In the future, Internet tests, and perhaps even games played by hundreds of thousands of people, may flag those whose cognition is beginning to wane ever so subtly, potentially helping researchers recruit for prevention trials. Data from one study indicated that because this approach picks out people who are already declining, it could boost the statistical power to detect a preventive effect. A validation study found that cognitive tests taken at home on an iPad gave results comparable to those obtained under supervision in a clinic, which could allow more frequent testing and better sensitivity to change. Another researcher introduced a questionnaire that a patient or caregiver can complete at home in five minutes and that allows clinicians to generate a valid Clinical Dementia Rating (CDR) score. These new methods form part of a wave of innovation in Alzheimer’s trials (see Part 1 of this series).

For patients enrolled in trials, frequent clinic visits are burdensome. They are a major reason participants drop out of trials, particularly as the patient declines and clinic visits overwhelm the caregiver. They also drive up costs for clinic space and for staff to supervise testing. Home-based testing could lift some of this burden from participants and make long, large prevention studies more economically feasible. But can tests taken at home reliably measure cognition? Distractions might arise during testing, or older adults might make mistakes without a test administrator to guide them. In practice, these tests perform quite well, said Michael Weiner of the University of California, San Francisco. UCSF runs the Brain Health Registry, which has enrolled some 10,000 adults from the Bay Area and around the world in the six months since it started. About half the registrants are older than 60, and many have a family history of Alzheimer’s, Weiner said. UCSF partnered with Cogstate to make some of Cogstate’s card-based cognitive tests available on the site.

At CTAD, Weiner spoke about data from 3,500 registrants who took those tests. He compared the mean and standard deviation of scores in each age range with the values that Cogstate records in clinical trials. The numbers were so close, Weiner told Alzforum, that at first he accused his statistician of mixing up the data. In addition, people who took the tests at home were as likely to finish all the tasks as those tested in a clinic, and most participants reported that they found the home environment more relaxing, Weiner added. Registrants with subjective memory complaints or a family history of AD scored lower than age-matched peers. The data suggest that at-home testing can be as accurate as testing in the clinic, he noted.

So far so good. But is there a way to reach much larger numbers of older adults? The brain-training company Lumosity, based in San Francisco, has many millions of users who play its online games, providing a potentially rich source of cognitive data (see Jun 2014 news story). Weiner collaborated with the company to analyze data from people who play its Memory Match game, which is designed to test visual working memory. The game is not a validated cognitive test, Weiner cautioned, and the data are noisy, to say the least. They present many analytical challenges: People play intermittently, with long, variable gaps between sessions, making it hard to compare scores from different players. Nonetheless, Weiner and colleagues believe they can calculate a “learning rate” based on how much a person’s play improves over consecutive sessions closely spaced in time.
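
The article does not say exactly how the learning rate was computed. A minimal sketch, assuming the rate is the average score gain between consecutive sessions played within a short window of each other, could look like this. The `Session` type, the `learning_rate` function, and the two-day window are hypothetical choices for illustration, not Weiner’s or Lumosity’s method.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional

@dataclass
class Session:
    played_at: datetime
    score: float

def learning_rate(sessions: list[Session],
                  max_gap: timedelta = timedelta(days=2)) -> Optional[float]:
    """Average score gain between consecutive sessions played within max_gap.

    Pairs separated by longer breaks are skipped, so intermittent play does not
    register as forgetting. Returns None if no pair is close enough in time.
    The two-day window is an arbitrary, hypothetical choice.
    """
    ordered = sorted(sessions, key=lambda s: s.played_at)
    gains = [
        later.score - earlier.score
        for earlier, later in zip(ordered, ordered[1:])
        if later.played_at - earlier.played_at <= max_gap
    ]
    return mean(gains) if gains else None

history = [
    Session(datetime(2014, 1, 1), 100.0),
    Session(datetime(2014, 1, 2), 110.0),   # +10, one day later: counted
    Session(datetime(2014, 1, 31), 105.0),  # 29-day break: skipped
    Session(datetime(2014, 2, 1), 120.0),   # +15, one day later: counted
]
print(learning_rate(history))  # 12.5
```

Restricting the calculation to closely spaced pairs is one way to make intermittent players comparable: a month-long break is simply left out rather than counted as a drop in learning.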

The researchers analyzed de-identified data from some 2,200 people aged 40 to 79 who had played at least 40 game sessions over about one year’s time. They found a large age effect, whereby older people posted lower initial game scores and learned at a slower rate. Nonetheless, the over-60 group did not decline noticeably over the course of the study period. This matches findings from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) and a large body of cognitive aging research, where healthy controls do not decline cognitively over one or two years, Weiner noted.

Among these older adults, however, some showed a drop in learning rate during the study. Because a preventive treatment’s effect is measured as a slowing of decline, people who are already declining give the treatment something measurable to slow. Based on this group’s average rate of decline, Weiner estimated that a one-year study enrolling such people would need 202 per arm to detect a 25 percent slowing of cognitive decline due to treatment. Thus, recruiting from this population could lower the sample size needed in a prevention trial, Weiner claimed. Such a recruitment method could be cheap and provide access to a large pool of potential trial volunteers. “This is a proof of concept that using the Internet to assess cognition in longitudinal datasets can be useful in neuroscience research,” Weiner said.
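
Why a declining group shrinks the required sample can be seen from the standard normal-approximation formula for comparing mean change between two equal-sized arms, in which the treatment effect is a fraction of the placebo group’s mean decline. The sketch below assumes a two-sided alpha of 0.05 and 80 percent power; the inputs are made-up illustrative values, because the article does not report the mean decline or its variability behind Weiner’s estimate of 202 per arm, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

def n_per_arm(mean_decline: float, sd_change: float, slowing: float = 0.25,
              alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants per arm for a two-arm trial comparing mean change.

    Normal-approximation formula:
        n = 2 * (z_{1-alpha/2} + z_{power})**2 * sd**2 / delta**2
    where delta = slowing * mean_decline is the treatment effect.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    delta = slowing * mean_decline
    return math.ceil(2 * (z_alpha + z_beta) ** 2 * sd_change ** 2 / delta ** 2)

# Illustrative inputs only (arbitrary units per year), not Weiner's data.
print(n_per_arm(mean_decline=1.0, sd_change=1.0))  # 252
print(n_per_arm(mean_decline=2.0, sd_change=1.0))  # 63
```

Because the required n scales with the square of (standard deviation of change / mean decline), doubling the average decline relative to its spread cuts enrollment roughly fourfold, which is the logic behind recruiting people who are already slipping.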

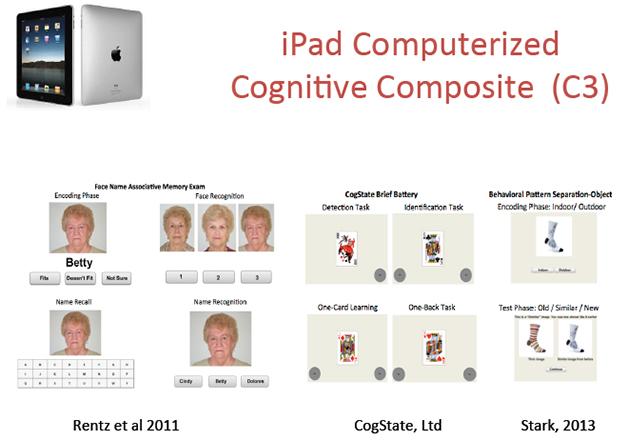

Can cognitive tests taken at home on an iPad do away with frequent trips to the memory clinic? A validation study of face-name, card-sorting, and pattern-separation tests suggests they can. [Image courtesy of Dorene Rentz, Brigham and Women’s Hospital.]

But would tests taken at home be a practical, reliable way to collect cognitive data? An early study using technologies such as telephone interviews, mail-in questionnaires, and Internet-based kiosks found a high dropout rate in the technology groups, but also hinted that the home-based methods were time-efficient despite requiring more training in the beginning (see Sano et al., 2010). Since then, technology has become simpler, with tablets now quite user-friendly. To see if these devices could collect accurate data at home, Dorene Rentz of Massachusetts General Hospital, Boston, enrolled 39 cognitively healthy, highly educated participants with an average age of 72.

First, participants came to the clinic to take the paper-and-pencil version of the ADCS-PACC composite that is being used in A4 (see Jun 2014 news story and Part 3 of this series). At this visit, they also took version A of a computerized cognitive composite (C3) on an iPad. The C3 includes four tests from the Cogstate battery, as well as two novel episodic memory tests: a face-name associative memory task and a pattern-separation task. The C3 is also being explored in A4, but there it is given under staff supervision rather than at home; this study compared home testing against clinic testing. At the end of the visit, participants took home the iPad, an instruction manual, and a phone number to call if they needed help. Over the next five days, they were to complete the assigned alternate version of the C3 each day. At the end of the week, participants returned to the clinic to take version A of the C3 again.
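
Because the home protocol gives each participant a fixed schedule of test forms, checking compliance amounts to comparing the form taken each day with the form assigned. A minimal sketch follows; the form labels "B" through "F", the function names, and the example logs are all hypothetical, since the article identifies only version A.

```python
from typing import Mapping

# Hypothetical form labels for the five at-home days; the article names only version A.
ASSIGNED_FORMS = {1: "B", 2: "C", 3: "D", 4: "E", 5: "F"}

def correct_days(log: Mapping[int, str],
                 assigned: Mapping[int, str] = ASSIGNED_FORMS) -> int:
    """Count at-home days on which the participant took the assigned form.

    A missing day or the wrong form (e.g., retaking version A) counts as an error.
    """
    return sum(1 for day, form in assigned.items() if log.get(day) == form)

def adherence_summary(logs: list[Mapping[int, str]],
                      assigned: Mapping[int, str] = ASSIGNED_FORMS) -> tuple[float, float]:
    """Return (share correct on all days, share correct on all but at most one day)."""
    counts = [correct_days(log, assigned) for log in logs]
    n_days = len(assigned)
    all_days = sum(c == n_days for c in counts) / len(counts)
    all_but_one = sum(c >= n_days - 1 for c in counts) / len(counts)
    return all_days, all_but_one

# Made-up logs mapping day -> form actually taken.
logs = [
    {1: "B", 2: "C", 3: "D", 4: "E", 5: "F"},
    {1: "B", 2: "A", 3: "D", 4: "E", 5: "F"},
    {1: "A", 2: "A", 3: "D", 4: "E", 5: "F"},
    {1: "B", 2: "C", 3: "D", 4: "E", 5: "F"},
]
print(adherence_summary(logs))  # (0.5, 0.75)
```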

How well did they do? Sixty-two percent completed all five tests correctly, and 95 percent completed four of five properly, Rentz reported. The biggest problem people had was taking the wrong version of the C3, most often retaking version A at home. Scores on different versions of the C3 agreed extremely well, indicating that these tests were equivalent, Rentz said. C3 scores from home and the clinic matched as well, with a correlation of 0.7, demonstrating that where someone took the test made no difference. The paper-and-pencil PACC results also correlated significantly with the C3. The statistical analysis was done with Samantha Burnham at CSIRO Computational Informatics in Floreat, Australia.
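
A correlation coefficient shows whether home and clinic scores rise and fall together, not whether they are interchangeable: a version that ran systematically high at home could still correlate perfectly with the clinic version. Method-comparison studies therefore often also report agreement, for example the mean home-minus-clinic difference and its spread, as in the Bland-Altman approach. The sketch below computes both on made-up scores, not data from Rentz’s study; the function names are hypothetical.

```python
import math
from statistics import mean, stdev

def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between paired score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def agreement(clinic: list[float], home: list[float]) -> tuple[float, float]:
    """Mean home-minus-clinic difference (bias) and SD of the differences."""
    diffs = [h - c for c, h in zip(clinic, home)]
    return mean(diffs), stdev(diffs)

clinic = [1.0, 2.0, 3.0, 4.0, 5.0]
home = [c + 2.0 for c in clinic]   # every home score is 2 points higher
print(pearson_r(clinic, home))     # 1.0: perfectly correlated...
print(agreement(clinic, home))     # (2.0, 0.0): ...yet systematically offset
```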

These results suggest that at-home tablet testing could be incorporated into trials, Rentz said. Next, she wants to see whether cognitively impaired people can complete these tests, perhaps with help from a study partner. She will also try to set cutoff scores separating normal from impaired users, and study larger cohorts. Meanwhile, Cogstate is improving its program to help users select the proper test each time, Rentz said, and fingerprint sensors like those in new mobile phones may help confirm that it is actually the proband, not the partner, who takes the test at home.

Rentz’s talk was enthusiastically received. Some scientists said they are setting up similar systems of home-based iPad tests where results are downloadable to the clinic; others emphasized that once the process is fully validated, being able to obtain much more frequent testing than is feasible with clinic visits will greatly improve the quality of cognition tracking. Steve Ferris of New York University Langone Medical Center noted that home-based iPad tests will be particularly useful in prevention trials of lifestyle interventions, which require large numbers of participants but far fewer clinic visits than trials of an invasive drug such as an antibody.

Can home-based tests also stage dementia severity? On a poster at CTAD, James Galvin of NYU Langone noted that gold-standard assessments for staging dementia, such as the CDR, require a trained clinician and take at least half an hour to complete. They work in trials but are impractical for screening large numbers of people. Quick screening tests such as the AD8, on the other hand, stage disease poorly (see May 2007 Webinar). To find a middle ground, Galvin developed the Quick Dementia Rating System (QDRS). This questionnaire takes five minutes for either the patient or a caregiver to complete, and it could be given over the Internet or on paper at home, Galvin said. The QDRS comprises 10 questions covering domains such as memory, problem-solving, mood, and language, with total scores ranging from zero for a cognitively normal person to 30 for severe impairment. Its four cognitive and six behavioral domains match up closely with those measured by the CDR.
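
Scoring a questionnaire like the QDRS is simple arithmetic: ten domain ratings summed to a total between 0 and 30. The sketch below assumes each domain is rated on a CDR-like scale of 0, 0.5, 1, 2, or 3, which is consistent with the 0-to-30 range reported here but is not spelled out in the article; the names are hypothetical, and this is not Galvin’s scoring software.

```python
# Assumed per-domain rating levels (CDR-like); not stated in the article.
ALLOWED_RATINGS = {0.0, 0.5, 1.0, 2.0, 3.0}
N_DOMAINS = 10  # four cognitive plus six behavioral domains, per the article

def qdrs_total(ratings: list[float]) -> float:
    """Sum ten domain ratings into a total from 0 (normal) to 30 (severe)."""
    if len(ratings) != N_DOMAINS:
        raise ValueError(f"expected {N_DOMAINS} ratings, got {len(ratings)}")
    invalid = [r for r in ratings if r not in ALLOWED_RATINGS]
    if invalid:
        raise ValueError(f"ratings outside the assumed scale: {invalid}")
    return float(sum(ratings))

print(qdrs_total([0.5, 1, 0, 0, 0, 0, 2, 0, 0, 0]))  # 3.5
```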

In initial testing in a cohort of 736 dementia caregivers, QDRS scores correlated highly with traditional rating scales, including measures of quality of life and caregiver burden. Galvin and colleagues then launched a validation study of the screen in 259 patient-caregiver pairs who receive care at NYU. Caregivers filled out the QDRS at home before their clinic visit and dropped the form off as they came in. A blinded clinician then performed a formal CDR, and Galvin compared how the two matched up. QDRS scores climbed with increasing CDR stage, with a correlation of r=0.73 between the two measures, the scientists reported at CTAD. In reliability testing, the QDRS had an intraclass correlation coefficient (ICC) of 0.93 with the CDR sum of boxes (CDR-SB). “By looking at the QDRS, I know what the person’s CDR-SB will be,” Galvin told Alzforum. The QDRS discriminated well between cognitively healthy people and those with mild cognitive impairment, Galvin reported. It also correlated with the Neuropsychiatric Inventory and Mini Mental State Exam.

The screen could be useful both to help recruit people for trials and for home-based assessment in longitudinal studies, Galvin suggested. He next plans to measure how people’s scores change over time. Like the CDR, the QDRS does not identify what disease underlies a person’s score—it could be AD, dementia with Lewy bodies, or something else.—Madolyn Bowman Rogers

References

News Citations

- CTAD Shows Alzheimer’s Field Trying to Reinvent Itself

- Brain Training Database: Treasure Trove for Preclinical Alzheimer’s Research?

- Test Battery Picks Up Cognitive Decline in Normal Populations

- From Shared CAP, Secondary Prevention Trials Are Off and Running

Webinar Citations

Paper Citations

- Sano M, Egelko S, Ferris S, Kaye J, Hayes TL, Mundt JC, Donohue M, Walter S, Sun S, Sauceda-Cerda L. Pilot study to show the feasibility of a multicenter trial of home-based assessment of people over 75 years old. Alzheimer Dis Assoc Disord. 2010 Jul-Sep;24(3):256-63.

Comments

Screen, Inc.

Dr. Rentz found that 62 percent of her "cognitively healthy, highly educated participants with an average age of 72" completed all tests successfully at home and that "scores from home and the clinic matched as well, with a correlation of 0.7, demonstrating that where someone took the test made no difference." Not only is a correlation of 0.7 not especially strong, but testing very highly educated volunteers in a university setting is unlikely to reveal usability problems. As with the CAMCI tablet tests, which require stylus typing on an on-screen keyboard, once you test people who are less educated (and less likely to be familiar with tablets), you encounter more anxiety and confusion, which interfere with the reliability of measurements. When we tested the CANS-MCI in a variety of primary care offices, we had to make a number of major changes to increase the range of patients who could take the tests reliably. This delayed commercial availability by several years; it’s no simple fix, even for very early detection.

I agree that "we need better ways of cheaply tracking cognition over multi-year trials." The home tablet method is probably not the way to go. There are reliable, longitudinally valid test batteries that can be set up by any staff member at a primary care or other health facility and economically self-administered by a very wide range of patients in those controlled environments. We have numerous case studies in which the presence of a spouse or other distractions in the testing room has a huge effect upon the scores.

Disclosure: Emory Hill is founder of Screen, Inc., creators and commercial distributor of the CANS-MCI touch screen test battery for detecting changes from normal functioning to mild cognitive impairment.
